A data lakehouse is a data platform that merges the best aspects of data warehouses and data lakes into one data management solution. Data warehouses tend to be more performant than data lakes, but they can be more expensive and more limited in their ability to scale. A data lakehouse attempts to solve this by leveraging cloud object storage to store a broader range of data types: structured, unstructured and semi-structured data.

Common Two-Tier Data Architecture

A data warehouse gathers raw data from multiple sources into a central repository and organizes it into a relational database infrastructure. This data management system primarily supports data analytics and business intelligence applications, such as enterprise reporting. The system uses ETL (extract, transform, load) processes to move data from its sources to its destination. However, it becomes inefficient and costly, particularly as the number of data sources and the quantity of data grow over time. An Azure data lake, by contrast, includes scalable cloud data storage and analytics services.
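The extract, transform and load steps can be sketched in Python as follows. This is a minimal illustration, not a production pipeline: the two CSV exports are hypothetical sample data, and an in-memory SQLite database from the standard library stands in for the relational warehouse. Note that the table schema is fixed before any data is loaded.

```python
import csv
import io
import sqlite3

# Hypothetical raw exports from two source systems (illustrative data only).
CRM_CSV = "customer_id,name,country\n1,Ada,uk\n2,Grace,us\n"
ORDERS_CSV = "order_id,customer_id,amount\n10,1,25.50\n11,2,12.00\n12,1,4.50\n"


def extract(text):
    """Extract: parse a CSV export into a list of dicts (all values are strings)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(customers, orders):
    """Transform: cast types and normalize values before loading."""
    dim = [(int(c["customer_id"]), c["name"], c["country"].upper()) for c in customers]
    fact = [(int(o["order_id"]), int(o["customer_id"]), float(o["amount"])) for o in orders]
    return dim, fact


def load(conn, dim, fact):
    """Load: write rows into relational tables whose schema is defined up front."""
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT)")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, "
        "customer_id INTEGER REFERENCES customers(id), amount REAL)"
    )
    with conn:  # commit both inserts together
        conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", dim)
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", fact)


conn = sqlite3.connect(":memory:")
load(conn, *transform(extract(CRM_CSV), extract(ORDERS_CSV)))

# A typical BI-style report over the loaded data: revenue per country.
report = conn.execute(
    "SELECT c.country, SUM(o.amount) FROM orders o "
    "JOIN customers c ON c.id = o.customer_id "
    "GROUP BY c.country ORDER BY c.country"
).fetchall()
print(report)  # [('UK', 30.0), ('US', 12.0)]
```

Every new source would need its own extract and transform step here, which is one way the cost mentioned above grows with the number of sources.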

What is a Data Lakehouse?

Azure Data Lake Storage enables organizations to store data of any size, format and speed for a wide variety of processing, analytics and data science use cases. When used with other Azure services, such as Azure Databricks, Azure Data Lake Storage is a cost-effective way to store and retrieve data across your entire organization. Since data lakehouses emerged from the challenges of both data warehouses and data lakes, it’s worth defining these different data repositories and understanding how they differ. Data lakehouses seek to resolve the core challenges of both data warehouses and data lakes to yield a better data management solution for organizations.

What is the difference between an Azure data lake and an Azure data warehouse?

Consistency means that data is in a valid state both when a transaction starts and when it ends. Isolation means that the intermediate state of a transaction is invisible to other transactions. Durability means that once a transaction completes successfully, its changes to data persist and are not undone, even in the event of a system failure.
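Isolation and durability can be observed with a small experiment. The sketch below uses Python's built-in `sqlite3` module purely because it ships with Python; it is not how a data lake or lakehouse implements these guarantees. A writer inserts a row inside an open transaction, a second connection checks whether it can see the row before and after the commit, and a fresh connection reopens the file afterwards. The table name `events` is hypothetical.

```python
import os
import sqlite3
import tempfile


def isolation_and_durability_demo(path):
    writer = sqlite3.connect(path)
    reader = sqlite3.connect(path)
    writer.execute("CREATE TABLE events (v INTEGER)")
    writer.commit()

    # Isolation: the INSERT opens a transaction whose change the reader cannot see yet.
    writer.execute("INSERT INTO events VALUES (1)")
    seen_before = reader.execute("SELECT COUNT(*) FROM events").fetchall()[0][0]

    # Committing makes the change visible to others and writes it to the database file.
    writer.commit()
    seen_after = reader.execute("SELECT COUNT(*) FROM events").fetchall()[0][0]
    writer.close()
    reader.close()

    # Durability (in the limited sense shown here): a brand-new connection still sees the row.
    fresh = sqlite3.connect(path)
    seen_reopened = fresh.execute("SELECT COUNT(*) FROM events").fetchall()[0][0]
    fresh.close()
    return seen_before, seen_after, seen_reopened


with tempfile.TemporaryDirectory() as tmp:
    result = isolation_and_durability_demo(os.path.join(tmp, "demo.db"))
print(result)  # (0, 1, 1)
```

Reopening a file only hints at durability; surviving a real crash or power loss also depends on how the engine flushes its writes to disk.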

However, coordinating these systems to provide reliable data can be costly in both time and resources. Long processing times contribute to data staleness and additional layers of ETL introduce more risk to data quality.

Key Technology Enabling the Data Lakehouse

IBM Research proposes that the unified approach of data lakehouses creates a unique opportunity for unified data resiliency management. Managed Delta Lake in Azure Databricks provides a layer of reliability that enables you to curate, analyze and derive value from your data lake on the cloud. ACID stands for atomicity, consistency, isolation and durability, all of which are key properties that define a transaction and ensure data integrity. Atomicity means that all changes to data in a transaction are performed as if they were a single operation: either all of them take effect or none of them do.
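Atomicity is easiest to see when a transaction fails halfway through. The following sketch again uses Python's standard `sqlite3` module as a stand-in transactional store (it is not Delta Lake or Azure Databricks); the `accounts` table and the `transfer` helper are hypothetical. The transfer credits one account and then debits another, and a `CHECK` constraint makes the debit fail when the balance would go negative.

```python
import sqlite3


def transfer(conn, src, dst, amount):
    """Credit `dst`, then debit `src`, as a single all-or-nothing transaction."""
    with conn:  # commits if the block succeeds, rolls back if it raises
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (id TEXT PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
with conn:
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("a", 100), ("b", 0)])

transfer(conn, "a", "b", 30)  # succeeds: both updates are committed together

try:
    # The credit to "b" runs first; the debit from "a" then violates the CHECK
    # constraint, and the rollback also undoes the credit already applied to "b".
    transfer(conn, "a", "b", 500)
except sqlite3.IntegrityError:
    pass

balances = dict(conn.execute("SELECT id, balance FROM accounts"))
print(balances)  # {'a': 70, 'b': 30}
```

Without the transaction, the failed transfer would have left account "b" with 530 and account "a" unchanged, an inconsistent state that atomicity rules out.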

What is an Azure data lake?

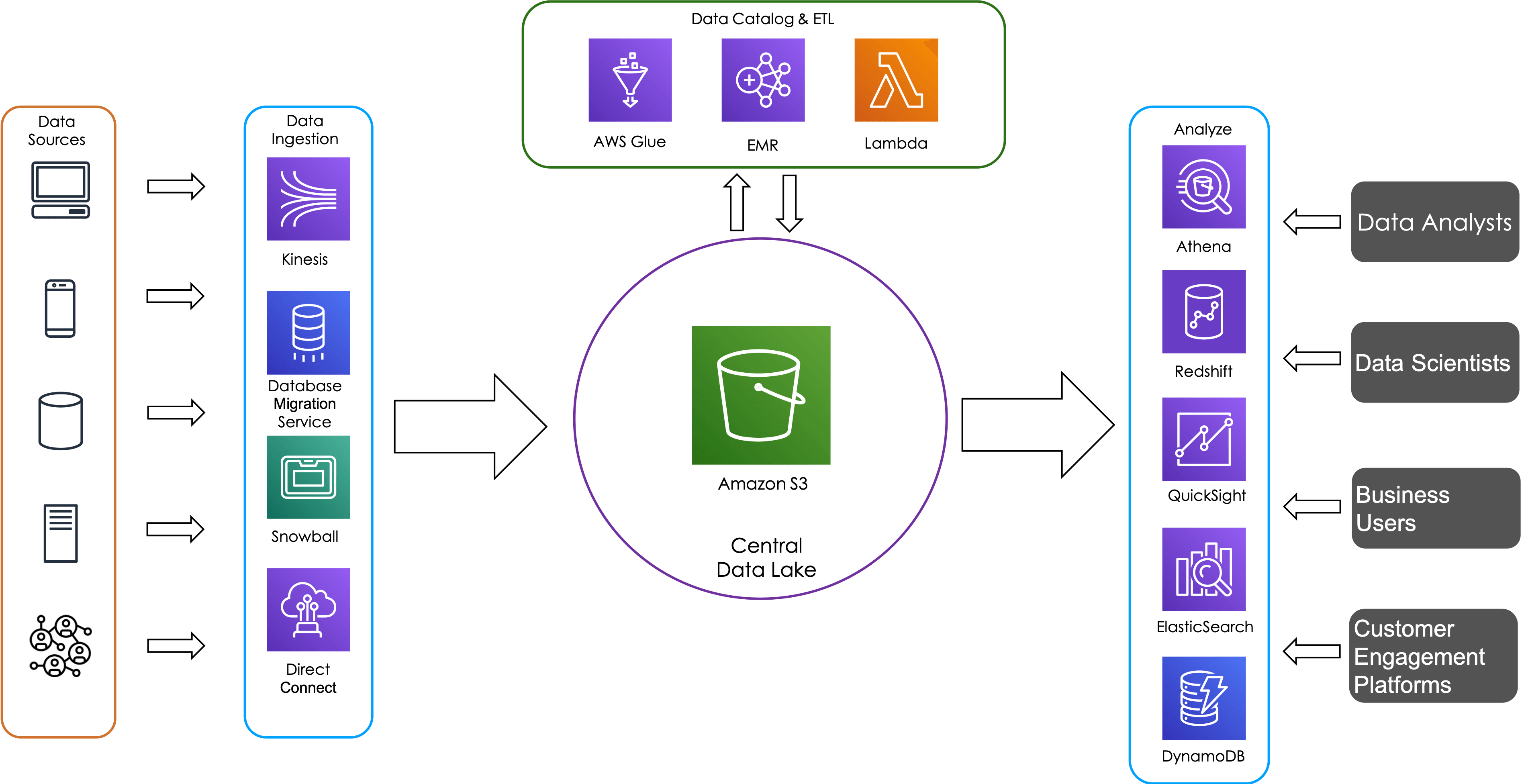

The data lakehouse addresses the flaws of data warehouses and data lakes to form a better data management system. It provides organizations with fast, low-cost storage for their enterprise data while also delivering enough flexibility to support both data analytics and machine learning workloads. Without a lakehouse, data teams typically stitch a data warehouse and a data lake together to enable BI and ML across the data in both systems, resulting in duplicate data, extra infrastructure cost, security challenges and significant operational costs. In a two-tier data architecture, data is extracted, transformed and loaded (ETL) from the operational databases into a data lake. This lake stores data from the entire enterprise in low-cost object storage, in a format compatible with common machine learning tools, but it is often not well organized or maintained.

The unique ability to ingest raw data in a variety of formats (structured, unstructured and semi-structured), along with the other benefits mentioned, makes a data lake the clear choice for data storage. Data lakes are known for their low cost and storage flexibility because they lack the predefined schemas of traditional data warehouses. The size and complexity of data lakes can require more technical resources, such as data scientists and data engineers, to navigate the amount of data they store. Additionally, since data governance is implemented further downstream in these systems, data lakes tend to be more prone to data silos, which can subsequently evolve into a data swamp.
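Because a data lake has no predefined schema, structure is usually applied when the data is read rather than when it is written ("schema-on-read"). A minimal Python sketch of that idea, assuming hypothetical JSON Lines sensor records and a hypothetical `read_with_schema` helper; real lake query engines do this at far larger scale:

```python
import json

# Raw records land in the lake as-is: nothing checks their shape on write.
RAW_LINES = [
    '{"device": "t-1", "temp_c": 21.5}',
    '{"device": "t-2", "temp_c": "22.0", "firmware": "1.4"}',
    '{"device": "t-3"}',
]


def read_with_schema(lines, schema):
    """Schema-on-read: apply column names and types only at query time."""
    rows = []
    for line in lines:
        record = json.loads(line)
        row = {}
        for column, cast in schema.items():
            value = record.get(column)
            # Missing fields become None; extra fields (e.g. "firmware") are ignored.
            row[column] = cast(value) if value is not None else None
        rows.append(row)
    return rows


# Different consumers can project different schemas over the same raw data.
readings = read_with_schema(RAW_LINES, {"device": str, "temp_c": float})
print(readings)
# [{'device': 't-1', 'temp_c': 21.5}, {'device': 't-2', 'temp_c': 22.0}, {'device': 't-3', 'temp_c': None}]
```

The flexibility cuts both ways: the string-typed `"22.0"` and the missing reading for `t-3` are only discovered at read time, which is why governance applied downstream can let data quality drift.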

A data lakehouse uses APIs to speed up task processing and conduct more advanced analytics. Specifically, this API layer gives consumers and developers the opportunity to use a range of languages and libraries, such as TensorFlow, at an abstract level. In the storage layer, structured, unstructured and semi-structured data is stored in open-source file formats, such as Parquet or Optimized Row Columnar (ORC). The real benefit of a lakehouse is the system’s ability to accept all data types at an affordable cost.
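Parquet and ORC are columnar formats: values from the same column are stored together, so a query that touches one column does not have to read the others, and repeated values compress well. The pure-Python sketch below only illustrates those two ideas with hypothetical sample rows; it does not read or write actual Parquet or ORC files, and the helper names are made up for this example.

```python
from typing import Any

rows = [
    {"id": 1, "region": "EU", "sales": 120.0},
    {"id": 2, "region": "US", "sales": 80.0},
    {"id": 3, "region": "EU", "sales": 50.0},
]


def to_columns(records: list[dict[str, Any]]) -> dict[str, list[Any]]:
    """Pivot row-oriented records into one list of values per column."""
    columns: dict[str, list[Any]] = {key: [] for key in records[0]}
    for record in records:
        for key, value in record.items():
            columns[key].append(value)
    return columns


def dictionary_encode(values: list[Any]) -> tuple[list[Any], list[int]]:
    """Replace repeated values with small integer codes into a dictionary of distinct values."""
    dictionary: dict[Any, int] = {}
    codes = [dictionary.setdefault(value, len(dictionary)) for value in values]
    return list(dictionary), codes


columns = to_columns(rows)

# An aggregate over one column scans only that column's values.
total_sales = sum(columns["sales"])

# A low-cardinality column shrinks to a short dictionary plus compact codes.
region_dict, region_codes = dictionary_encode(columns["region"])
print(total_sales, region_dict, region_codes)  # 250.0 ['EU', 'US'] [0, 1, 0]
```

Real columnar formats add much more (row groups, statistics, multiple encodings and compression codecs), so treat this only as intuition for why they suit analytics workloads.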

Structured Query Language (SQL) is a powerful query language for exploring your data and discovering valuable insights. Delta Lake is an open-source storage layer that brings reliability to data lakes with ACID transactions, scalable metadata handling, and unified streaming and batch data processing. Delta Lake runs on top of your existing data lake and is fully compatible with Apache Spark APIs.
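One way a storage layer can offer ACID commits on top of plain files is an ordered transaction log: Delta Lake, for example, keeps its log in a `_delta_log` directory of numbered JSON files. The sketch below is a heavily simplified toy loosely inspired by that idea, not Delta Lake's actual protocol or API; the `_log` directory, the `commit` and `snapshot` helpers, and the `add`/`remove` action format are all hypothetical. Each commit is published under the next version number only if no other writer has claimed it, and readers rebuild the table state by replaying the log in order.

```python
import json
import os
import tempfile

LOG_DIR = "_log"  # hypothetical name for this toy log


def commit(table_dir: str, version: int, actions: list[dict]) -> bool:
    """Publish `actions` as commit `version`; return False if that version is already taken."""
    log_dir = os.path.join(table_dir, LOG_DIR)
    os.makedirs(log_dir, exist_ok=True)
    final_path = os.path.join(log_dir, f"{version:020d}.json")
    # Write the complete commit to a private temp file first...
    fd, tmp_path = tempfile.mkstemp(dir=log_dir, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(actions, f)
        # ...then publish it with a hard link, which fails if the name already exists,
        # so readers never see a half-written commit and only one writer wins a version.
        os.link(tmp_path, final_path)
        return True
    except FileExistsError:
        return False  # another writer won this version; re-read the log and retry
    finally:
        os.remove(tmp_path)


def snapshot(table_dir: str) -> list[str]:
    """Replay every commit in version order to find the table's current data files."""
    log_dir = os.path.join(table_dir, LOG_DIR)
    files: set[str] = set()
    for name in sorted(n for n in os.listdir(log_dir) if n.endswith(".json")):
        with open(os.path.join(log_dir, name)) as f:
            for action in json.load(f):
                if action["op"] == "add":
                    files.add(action["path"])
                elif action["op"] == "remove":
                    files.discard(action["path"])
    return sorted(files)


with tempfile.TemporaryDirectory() as table:
    first = commit(table, 0, [{"op": "add", "path": "part-0.parquet"}])
    # A second writer racing for version 0 is rejected instead of corrupting the table.
    lost = commit(table, 0, [{"op": "add", "path": "part-x.parquet"}])
    second = commit(table, 1, [{"op": "remove", "path": "part-0.parquet"},
                               {"op": "add", "path": "part-1.parquet"}])
    current = snapshot(table)
print(first, lost, second, current)  # True False True ['part-1.parquet']
```

The data files themselves are never modified in place; a commit only adds or removes references to them, which is what lets batch and streaming readers share one consistent view. Whether `os.link` is atomic depends on the underlying file system, and object stores need a different put-if-absent mechanism.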

It supports diverse datasets, i.e. both structured and unstructured data, meeting the needs of both business intelligence and data science workstreams. It typically supports programming languages such as Python and R, as well as high-performance SQL. Data lakes use open formats, so users avoid lock-in to a proprietary system such as a data warehouse. Open standards and formats have become increasingly important in modern data architectures. Data lakes are also highly durable and low cost because of their ability to scale and leverage object storage. Additionally, advanced analytics and machine learning on unstructured data are among the most strategic priorities for enterprises today.
